Solutions in Science 2025

SinS 2025: The hype of AI and its impact on the business of the life sciences

Jul 18 2025

Artificial intelligence (AI) is already transforming scientific research and the development of novel pharmaceutical compounds, but its successful implementation requires more than just technological enthusiasm, according to Santiago Domínguez, President of SciY and President of the Software Division at Bruker BioSpin.

Speaking at the Solutions in Science 2025 conference in Brighton, United Kingdom, Domínguez outlined both the opportunities and limitations of AI adoption in the life sciences, drawing on his experience of enabling digital workflows through accessible, interoperable and analytics-ready data.

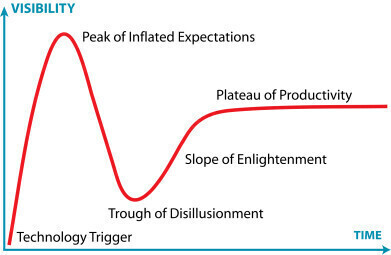

“AI is no longer a speculative opportunity but an actively evolving reality,” said Domínguez. He explained that, like personal computing and the internet before it, AI is progressing through the ‘hype cycle’, a framework developed by the American research and advisory firm Gartner, Inc. for assessing business applications of emerging technologies. The hype cycle charts the maturity of an emerging technology, and AI is now beginning to climb the so-called ‘slope of enlightenment’ towards tangible productivity (see graphic).

Domínguez noted that disillusionment at senior leadership level often follows failed deployments whose causes lie not in the technology’s limitations but in unrealistic expectations and poor data readiness. He pointed to AI’s reliance on structured, annotated and standardised data (‘AI-ready data’), yet reported that in recent surveys 57% of organisations have conceded that their data is not suitable for AI. He suggested that the remainder may be overestimating their preparedness.

Domínguez identified AI agents and AI-ready data as the two most rapidly advancing domains within the life sciences. He noted in particular that pharmaceutical companies have taken a leading role, establishing billion-dollar partnerships with algorithm-focused start-ups that possess no scientific assets of their own. Domínguez argued that these partnerships expose a cultural divide: established pharmaceutical companies often work with paper-based legacy systems, whereas AI-native companies tend to lack access to experimental data and domain-specific expertise. Bridging this gap will be critical to maximising AI’s impact on the industry.

Domínguez described how he had conducted a personal analysis of the formal annual reports of major companies across the chemicals, pharmaceuticals, and food and beverage sectors, concluding that all now cite AI as a strategic priority over the next five years. Although some of this activity may reflect industry ‘bandwagoning’, it nonetheless signals a broad recognition of AI’s growing relevance. Hiring practices reinforce this point, with pharmaceutical companies recruiting large numbers of data scientists, even amid wider restructuring programmes.

Domínguez further said that AI is already accelerating research and development (R&D) timelines. One pharmaceutical company, he noted, has reduced its drug development cycle from ten years to seven and is targeting three. He stated that this acceleration is vital for expanding personalised medicine and for treating rare diseases, where patients cannot afford to wait a decade, the current development period, for tailored treatments. He also highlighted the environmental advantages of simulating experiments in silico, which reduces consumption of single-use solvents and reagents.

He cited examples where AI has already delivered measurable gains. In drug discovery, AI is being used to identify and optimise candidate compounds with less reliance on physical screening. In biomarker research, AI tools have helped scientists mine complex datasets to identify diagnostic or therapeutic targets. Models are also being applied to predict pharmacokinetic behaviour (absorption, distribution, metabolism and excretion) in early-stage trials, reducing the need for conventional testing methodologies.

Natural language processing tools have streamlined regulatory report preparation, cutting timelines from weeks to minutes, while predictive models are improving laboratory efficiency by forecasting equipment maintenance needs, avoiding unplanned downtime caused by machine failures.

Domínguez also presented several case studies that exemplify AI’s practical impact. Novartis, for example, has reduced the time taken to compile clinical trial reports from 12 weeks per person to a mere ten minutes. GlaxoSmithKline has automated substantial parts of its Investigational New Drug application process, cutting the workload from six people working over two months to one person working for just four hours.

Away from the regulatory field, Insilico Medicine has identified a compound, developed entirely through an AI-driven design process, that is now undergoing Phase 2 trials. Meanwhile, AI developed by TriNetX has matched oncology patients to suitable clinical trials with 92% accuracy, based on genomic and phenotypic data. In cardiovascular medicine, a start-up based in Cambridge, UK, is replacing invasive angiograms with AI-interpreted computed tomography scans. And in manufacturing, one firm has shortened the drug batch release process from 30 days to under 90 minutes.

He also discussed the emergence of quantum chemical simulations that can now model more than 1.5 trillion reactions per minute – a level of throughput previously unimaginable using traditional laboratory experiments.

Despite this momentum, Domínguez cautioned that several persistent barriers continue to hinder implementation. Chief among them is the fragmentation of data formats: one of his clients reportedly uses more than 11,000 unique proprietary data formats within its R&D infrastructure, preventing effective data access and analysis. Many organisations also operate multiple Laboratory Information Management Systems (LIMS), which are often incompatible with one another, obstructing data integration and unified insights.

Cultural resistance within organisations also remains a formidable obstacle. Domínguez cited a study in The Lancet which found that although 97% of neurologists acknowledged AI’s superior performance in some diagnostic tasks, 93% still declined to use AI in their practice. He further highlighted scalability as a technical challenge, noting that Bruker has measured data flows of more than 120 terabytes per day from one large pharmaceutical operation, triple the daily volume of data generated by NASA, while regulatory and compliance requirements for long-term storage add further complexity.

To address these issues, Domínguez outlined several best practices. He urged organisations to begin with business value rather than technology, applying AI to workflows that are expensive, inefficient or slow and focusing on use cases where it can deliver demonstrable returns. He recommended consolidating and standardising data formats, centralising access, and adopting the FAIR (findable, accessible, interoperable and reusable) data principles. Integration, he said, must extend across inventory management, scheduling, LIMS and other systems if laboratories are to benefit from automation.

Cloud-based infrastructure will also be essential to support the multi-terabyte data volumes generated by modern workflows. Domínguez stressed the importance of embedding application programming interfaces (APIs) so that AI can move automatically between datasets and across platforms. Systems should be designed to support open standards and to make third-party tools easy to use. Without robust metadata, even the most extensive datasets can be rendered ineffective. Perhaps most importantly, he urged organisations to bring scientists and technicians along on this journey, warning that user resistance is likely to persist unless the benefits of AI become tangible to individuals in their own work.

Domínguez concluded that AI in science is no longer speculative, but its successful deployment is far from guaranteed. Realising the full benefits – in speed, sustainability, cost-efficiency and scientific discovery – demands a foundation of data discipline, cross-functional collaboration and cultural change. With the right infrastructure and leadership, laboratories can transition from places of confirmation to engines of intelligent, automated and accelerated experimentation.

Digital Edition

Lab Asia Dec 2025

December 2025

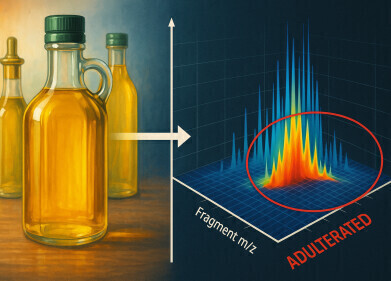

Chromatography Articles- Cutting-edge sample preparation tools help laboratories to stay ahead of the curveMass Spectrometry & Spectroscopy Articles- Unlocking the complexity of metabolomics: Pushi...

View all digital editions

Events

Jan 21 2026 Tokyo, Japan

Jan 28 2026 Tokyo, Japan

Jan 29 2026 New Delhi, India

Feb 07 2026 Boston, MA, USA

Asia Pharma Expo/Asia Lab Expo

Feb 12 2026 Dhaka, Bangladesh