How Does Thermal Imaging Work?

Jul 25 2014

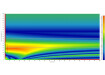

Thermal imaging produces images using the infrared part of the electromagnetic (EM) spectrum. Infrared is between visible light and microwaves in the EM spectrum.

What Is Infrared?

All objects with a temperature above absolute zero emit radiation as a result of the thermal motion of their molecules. Infrared radiation is emitted when molecules change their rotational and vibrational states.

Objects emit radiation over a wide range of wavelengths, and the intensity at each wavelength depends on the object's temperature. As the temperature of an object increases, the thermal motion of its molecules also increases, so it emits more radiation. Infrared radiation has a wavelength range of 700 nm to 1 mm; the visible range is 400-700 nm.
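As a rough illustration of the link between temperature and wavelength, the sketch below uses Wien's displacement law, which gives the wavelength at which an ideal blackbody emits most strongly. The law is not named in this article, and the example temperatures and the function names are assumptions chosen for illustration; real objects are not perfect blackbodies.

```python
# Wien's displacement constant in metre-kelvins (CODATA value).
WIEN_B = 2.897771955e-3


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometres) at which an ideal blackbody emits most strongly."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_B / temperature_k * 1e6


def is_infrared(wavelength_um: float) -> bool:
    """True if the wavelength lies in the 700 nm to 1 mm range quoted above."""
    return 0.7 <= wavelength_um <= 1000.0


# Illustrative temperatures (assumed values, not taken from the article).
examples = [
    ("room-temperature object", 293.0),
    ("human skin", 305.0),
    ("surface of the Sun", 5800.0),
]

for label, temp_k in examples:
    peak = peak_wavelength_um(temp_k)
    print(f"{label}: {temp_k:.0f} K, peak near {peak:.2f} um, "
          f"infrared: {is_infrared(peak)}")
```

Under these assumptions, everyday objects peak at around 10 µm, well inside the infrared range, while something as hot as the Sun peaks in the visible range.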

How to Detect Infrared

Infrared can be detected in the same way that a digital camera detects light. A lens focuses the infrared radiation onto a detector. The detector responds with an electrical signal, which is then processed to form an image called a thermograph.

The detection of infrared radiation relies on the detector seeing a difference in infrared intensity between an object and its surroundings. This difference will be due to:

- The temperature difference between the object and its surroundings.

- How well the object and its surroundings can emit infrared. This property is known as an object’s emissivity.
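The two factors above can be sketched with the Stefan-Boltzmann law, which gives the total power radiated per unit area as emissivity × σ × T⁴. This is a simplified model that the article does not spell out: it ignores reflected radiation, the atmosphere, and the limited wavelength band of a real detector. The function names and example values are hypothetical.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA value).
SIGMA = 5.670374419e-8


def radiant_exitance(temperature_k: float, emissivity: float) -> float:
    """Power radiated per unit area (W/m^2) by a surface at the given temperature."""
    if temperature_k < 0:
        raise ValueError("temperature cannot be below absolute zero")
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must be between 0 and 1")
    return emissivity * SIGMA * temperature_k ** 4


def contrast(obj_temp_k: float, obj_emissivity: float,
             bg_temp_k: float, bg_emissivity: float) -> float:
    """Difference in radiated power between an object and its surroundings."""
    return (radiant_exitance(obj_temp_k, obj_emissivity)
            - radiant_exitance(bg_temp_k, bg_emissivity))


# Same emissivity, different temperature: the warmer object stands out.
print(contrast(310.0, 0.95, 293.0, 0.95))

# Same temperature, different emissivity: the object still stands out.
print(contrast(293.0, 0.95, 293.0, 0.10))

# Same temperature and emissivity: no difference for the detector to see.
print(contrast(293.0, 0.95, 293.0, 0.95))
```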

Lenses and Detectors

Because standard camera lenses are poor at transmitting infrared, the lenses of thermal imaging devices must be made from a different material that is transparent to infrared radiation. High-quality thermal imaging lenses are made from materials with semiconductor properties, such as germanium. Other materials, such as polymers, can be designed for use as infrared lenses and offer lower-cost options.

Detectors in thermal imaging devices come in two basic types: cooled and uncooled. As their names suggest, one operates at colder temperatures than the other.

Cooled Detectors

Cooled detectors operate at a temperature much lower than their surroundings (typically 60-100 K). Cooling is needed because the semiconductor materials used in these detectors are very sensitive to infrared radiation: if the devices were not cooled, the detectors would be flooded with radiation from the device itself. When the detector absorbs infrared, small changes occur in the charge conduction of the semiconductor. These changes are processed into the infrared image, or thermograph. Cameras with cooled detectors are designed to detect very small differences in temperature at a high resolution.
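To get a feel for why cooling matters, the sketch below uses Planck's law for an ideal blackbody to compare how much a component radiates at a single infrared wavelength when it is at room temperature and when it is cooled. The 10 µm wavelength, the 300 K ambient temperature, and the 77 K cooled temperature (within the 60-100 K range mentioned above) are assumptions for illustration, and a real device is not an ideal blackbody.

```python
import math

# Physical constants (exact SI values).
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def spectral_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Planck's law: blackbody spectral radiance in W sr^-1 m^-3."""
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    # expm1(x) computes exp(x) - 1 accurately.
    return 2.0 * H * C ** 2 / (wavelength_m ** 5 * math.expm1(exponent))


WAVELENGTH_M = 10e-6   # assumed wavelength of interest (10 um)
AMBIENT_K = 300.0      # assumed uncooled device temperature
COOLED_K = 77.0        # assumed cooled detector temperature

ratio = (spectral_radiance(WAVELENGTH_M, AMBIENT_K)
         / spectral_radiance(WAVELENGTH_M, COOLED_K))
print(f"Self-emission at 10 um is about {ratio:.2e} times lower when cooled")
```

Under these assumptions, cooling reduces the device's own emission at 10 µm by roughly a factor of a million, which leaves the detector free to respond to the much weaker signal from the scene.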

Uncooled Detectors

Uncooled detectors operate at ambient temperatures. Incoming heat radiation changes the electrical properties of the detector, and these changes are measured to form the image. Uncooled detectors are not as sensitive as cooled detectors, but they are cheaper to produce and operate.

Infrared cameras typically produce a monochrome image because the detectors do not distinguish between wavelengths of the incoming radiation, only its intensity. For a look at what goes into making a high-quality thermograph, see: Thermography Shows why Champagne Should be Poured Differently.
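Since each detector element reports only an intensity, a monochrome image can be produced by scaling those readings to grey levels. The following is a minimal sketch of one such mapping (simple min-max scaling to 0-255); the function name and the sample readings are hypothetical, and actual cameras may process the signal quite differently.

```python
def to_grayscale(frame: list[list[float]]) -> list[list[int]]:
    """Scale raw intensity readings linearly to grey levels from 0 to 255."""
    values = [value for row in frame for value in row]
    lowest, highest = min(values), max(values)
    span = highest - lowest
    if span == 0:
        # A perfectly uniform scene has no contrast: render it as black.
        return [[0 for _ in row] for row in frame]
    return [[round((value - lowest) / span * 255) for value in row]
            for row in frame]


# Hypothetical 2x2 frame of raw detector readings (arbitrary units).
raw_frame = [
    [0.0, 1.0],
    [3.0, 4.0],
]

image = to_grayscale(raw_frame)
print(image)  # [[0, 64], [191, 255]]
```

The weakest reading maps to black and the strongest to white, so the image shows relative intensity across the scene and carries no wavelength information.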
