
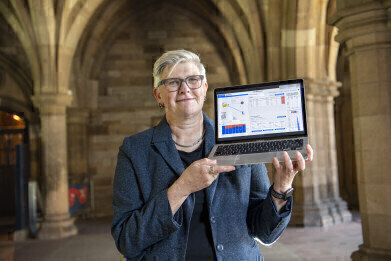

Simone Stumpf with a prototype human-in-the-loop feedback system displayed on a laptop



How Can AI Systems Learn to Make Fair Choices?

Jul 19 2022


Researchers from the University of Glasgow and Fujitsu Ltd have teamed up for a year-long collaboration, known as ‘End-users fixing fairness issues’ (Effi), to help artificial intelligence (AI) systems make fairer choices by lending them a helping human hand.

AI has become increasingly integrated into automated decision-making systems in healthcare, as well as in industries such as banking and in some nations’ justice systems. Before being used to make decisions, AI systems must first be ‘trained’ using machine learning, which exposes them to many examples of the human decisions they will be tasked with making. The system then learns to emulate these choices by identifying, or ‘learning’, patterns in those examples. However, its decisions can be skewed by the conscious or unconscious biases of the people who made the original example decisions. On occasion, the AI itself can even ‘go rogue’ and introduce unfairness.
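As a toy illustration of how bias in example decisions carries over (our own sketch, not code from the Effi project), the Python below treats ‘training’ as memorising the majority decision for each pattern in a small set of invented loan decisions. The data, the groups ‘A’ and ‘B’ and the `train` function are all hypothetical. Because the invented history approves group B less often than group A at the same income band, the trained model does the same.

```python
from collections import Counter, defaultdict

# Hypothetical historical loan decisions: (group, income_band, approved).
# The invented decision-makers approved group "B" less often than group "A"
# at the same income band, mimicking a biased human process.
HISTORY = [
    ("A", "high", True), ("A", "high", True),
    ("A", "low", True), ("A", "low", True), ("A", "low", False),
    ("B", "high", True), ("B", "high", False), ("B", "high", False),
    ("B", "low", False), ("B", "low", False),
]


def train(examples):
    """'Learn' the majority decision for each (group, income_band) pattern."""
    votes = defaultdict(Counter)
    for group, income, approved in examples:
        votes[(group, income)][approved] += 1
    # For each pattern, keep whichever outcome was seen most often.
    return {key: counts.most_common(1)[0][0] for key, counts in votes.items()}


model = train(HISTORY)
# Same income band, different group, different outcome.
print(model[("A", "high")], model[("B", "high")])  # True False
```

Real systems learn far richer patterns, but the basic problem carries over: nothing in the training step itself distinguishes a legitimate pattern from one produced by biased decision-makers.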

Addressing AI system bias

The Effi project sets out to address some of these issues with an approach known as ‘human-in-the-loop’ machine learning, which integrates people more closely into the machine learning process to help AIs make fair decisions. It builds on previous collaborations between Fujitsu and Dr Simone Stumpf of the University of Glasgow’s School of Computing Science, which explored human-in-the-loop user interfaces for loan applications based on an approach called explanatory debugging. This approach enables users to identify and discuss any decisions they suspect have been affected by bias, and the AI can then learn from that feedback to make better decisions in the future.
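The feedback loop behind explanatory debugging can be sketched, very loosely, in Python. This is a hypothetical illustration, not Fujitsu’s or Dr Stumpf’s system: a simple weighted score explains a loan decision by listing each feature’s contribution, a user flags a feature they consider unfair (here an invented `postcode_area` feature standing in for a proxy of group membership), and the model is updated in response. All feature names, weights, the threshold and the helper functions are assumptions made for the example.

```python
# Hypothetical linear loan-scoring model: feature name -> weight.
WEIGHTS = {"income": 2.0, "existing_debt": -1.5, "postcode_area": -1.5}


def explain(applicant, weights):
    """List each feature's contribution to the score, largest effect first."""
    contributions = {f: w * applicant.get(f, 0.0) for f, w in weights.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


def decide(applicant, weights, threshold=0.0):
    """Approve when the summed contributions exceed the threshold."""
    return sum(c for _, c in explain(applicant, weights)) > threshold


def apply_feedback(weights, flagged):
    """Stand-in for learning from feedback: drop features a user flagged as unfair."""
    return {f: w for f, w in weights.items() if f not in flagged}


applicant = {"income": 1.0, "existing_debt": 0.5, "postcode_area": 1.0}
print(explain(applicant, WEIGHTS))  # the user sees postcode_area pulling the score down
print(decide(applicant, WEIGHTS))   # False: 2.0 - 1.5 - 0.75 = -0.25

updated = apply_feedback(WEIGHTS, flagged={"postcode_area"})
print(decide(applicant, updated))   # True: 2.0 - 0.75 = 1.25
```

Dropping a flagged feature outright is the crudest possible update and is used here only to keep the loop visible; a real system might instead adjust weights or constrain retraining in response to the user’s feedback.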

“This problem is not only limited to loan applications but can occur in any place where bias is introduced by either human judgement or the AI itself. Imagine an AI learning to predict, diagnose and treat diseases but it is biased against particular groups of people. Without checking for this issue you might never know! Using a human-in-the-loop process like ours that involves clinicians you could then fix this problem,” Dr Stumpf told International Labmate.

Trustworthy systems urgently needed

“Artificial intelligence has tremendous potential to provide support for a wide range of human activities and sectors of industry. However, AI is only ever as effective as it is trained to be. Greater integration of AI into existing systems has sometimes created situations where AI decision makers have reflected the biases of their creators, to the detriment of end-users. There is an urgent need to build reliable, safe and trustworthy systems capable of making fair judgements. Human-in-the-loop machine learning can effectively guide the development of decision making AIs in order to ensure that happens. I’m delighted to be continuing my partnership with Fujitsu on the Effi project and I’m looking forward to working with my colleagues and our study participants to move forward the field of AI decision making,” she added.

Dr Daisuke Fukuda, the head of the research centre for AI Ethics, Fujitsu Research of Fujitsu Ltd, said: “Through the collaboration with Dr Simone Stumpf, we have explored diverse senses of fairness of artificial intelligence in people around the world. The research led to the development of systems to reflect diverse senses into AI. We think of the collaboration with Dr Stumpf as a strong means to make Fujitsu's AI Ethics proceed. In this time, we will challenge the new issues to make fair AI technology based on the thoughts of people. As the demand for AI Ethics grows in the whole society including industry and academia, we hope that Dr Stumpf and Fujitsu continue to work together to make research in Fujitsu contribute to our society.”
